Screen scraping is normally associated with the programmatic collection of visual data from a source, instead of parsing data as in web scraping. Originally, screen scraping referred to the practice of reading text data from a computer display terminal’s screen. This was generally done by reading the terminal’s memory through its auxiliary port, or by connecting the terminal output port of one computer system to an input port on another. The term screen scraping is also commonly used to refer to the bidirectional exchange of data. This may be a simple case in which the controlling program navigates through the user interface, or a more complex scenario in which the controlling program enters data into an interface meant to be used by a human. More modern screen scraping techniques include capturing the bitmap data from the screen and running it through an OCR engine or, for some specialized automated testing systems, matching the screen’s bitmap data against expected results. For GUI applications, this can be combined with querying the graphical controls by programmatically obtaining references to their underlying programming objects.
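
The original, text-terminal form of screen scraping can be illustrated with a small Python sketch. It assumes the screen contents have already been captured as a list of text rows, and it reads fields from fixed row and column positions. The field layout (`FIELDS`), the sample screen, and the function name `scrape_screen` are all hypothetical and chosen only for illustration; a real terminal application would have its own layout.

```python
from typing import NamedTuple


class Field(NamedTuple):
    """Position of one value on the captured screen (all values hypothetical)."""
    name: str
    row: int
    col: int
    width: int


# Assumed layout: labels occupy columns 0-9, values start at column 10.
FIELDS = [
    Field("account", row=1, col=10, width=8),
    Field("name", row=2, col=10, width=20),
    Field("balance", row=3, col=10, width=12),
]


def scrape_screen(screen: list[str], fields: list[Field]) -> dict[str, str]:
    """Cut each field out of the screen buffer by position and trim padding."""
    result = {}
    for f in fields:
        line = screen[f.row] if f.row < len(screen) else ""
        result[f.name] = line[f.col:f.col + f.width].strip()
    return result


# A made-up captured screen, built with padding so the columns line up.
SCREEN = [
    "ACCOUNT INQUIRY",
    "ACCOUNT:".ljust(10) + "00412345",
    "NAME:".ljust(10) + "JANE EXAMPLE".ljust(20),
    "BALANCE:".ljust(10) + "1,204.50".rjust(12),
]

record = scrape_screen(SCREEN, FIELDS)
print(record)
```

Because the values are located purely by position, the sketch silently returns wrong or empty strings if the application moves a field, which is the fragility that makes this technique hard to maintain.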

Data Scraping

Data transfer between programs is accomplished using data structures suited for automated processing by computers, not people. Such interchange formats and protocols are typically rigidly structured, well-documented, easily parsed, and keep ambiguity to a minimum. Very often, these transmissions are not human-readable at all. Thus, the key element that distinguishes data scraping from regular parsing is that the output being scraped was intended for display to an end user, rather than as input to another program, and is therefore usually neither documented nor structured for convenient parsing. Data scraping often involves ignoring binary data, display formatting, redundant labels, superfluous commentary, and other information that is either irrelevant or hinders automated processing. Data scraping is most often done either to interface with a legacy system that has no other mechanism compatible with current hardware, or to interface with a third-party system that does not provide a more convenient API. In the second case, the operator of the third-party system will often see screen scraping as unwanted, for reasons such as increased system load, the loss of advertisement revenue, or the loss of control of the information content. Data scraping is generally considered an ad hoc, inelegant technique, often used only as a “last resort” when no other mechanism for data interchange is available. Aside from the higher programming and processing overhead, output displays intended for human consumption often change structure frequently. Humans can cope with this easily, but a computer program may report nonsense, having been told to read data in a particular format or from a particular place and having no knowledge of how to check its results for validity.
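
As a rough illustration of the points above, the following Python sketch scrapes a made-up, human-oriented text report. It skips banner lines and decoration, keeps only the labelled values, and then validates the result so that a layout change raises an error instead of producing nonsense. The report format, the regular expression, and the function names `scrape_report` and `validate` are assumptions made for this example, not part of any real system.

```python
import re
from decimal import Decimal, InvalidOperation

# Hypothetical report written for a human reader: banners, a page note,
# dot leaders and a currency symbol that a program has to ignore.
REPORT = """\
==== Daily Summary ====
Printed by: operator7      (page 1 of 1)

Item ......... Widget A
Quantity ..... 12
Unit price ... $ 3.50
-----------------------
"""

# A "label .... value" line; anything else is treated as display noise.
LINE = re.compile(r"^(?P<label>[A-Za-z ]+?)\s*\.{2,}\s*(?P<value>.+?)\s*$")


def scrape_report(text):
    """Collect label/value pairs, ignoring lines that do not match."""
    fields = {}
    for line in text.splitlines():
        match = LINE.match(line)
        if match:
            fields[match.group("label").strip().lower()] = match.group("value")
    return fields


def validate(fields):
    """Check that the scraped values look sane before anyone relies on them."""
    missing = {"item", "quantity", "unit price"} - fields.keys()
    if missing:
        raise ValueError(f"layout changed? missing fields: {sorted(missing)}")
    try:
        quantity = int(fields["quantity"])
        price = Decimal(fields["unit price"].replace("$", "").strip())
    except (ValueError, InvalidOperation) as exc:
        raise ValueError(f"unexpected value format: {exc}") from exc
    return {"item": fields["item"], "quantity": quantity, "unit_price": price}


record = validate(scrape_report(REPORT))
print(record)
```

The validation step is the design choice worth noting: since the display format is undocumented and may change without notice, failing loudly on a missing or malformed field is usually safer than passing along whatever text happened to be in the expected place.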

Web Scraping

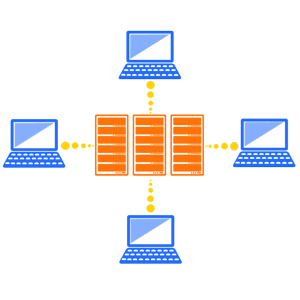

Web scraping is a computer software technique of extracting information from websites. Usually, such software programs simulate human exploration of the World Wide Web (WWW) by either implementing the low-level Hypertext Transfer Protocol (HTTP) directly, or embedding a fully-fledged web browser such as Mozilla Firefox. Web scraping is closely related to web indexing, which indexes information on the web using a bot or web crawler and is a universal technique adopted by most search engines. In contrast, web scraping focuses more on the transformation of unstructured data on the web, typically in HTML format, into structured data that can be stored and analysed in a central local database or spreadsheet. Web scraping is also related to web automation, which simulates human browsing using computer software. Uses of web scraping include online price comparison, contact scraping, weather data monitoring, website change detection, research, web mashups and web data integration.
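
The transformation from HTML into structured data can be sketched with Python's standard library alone. The example below parses an HTML table with `html.parser.HTMLParser` and writes the rows out as CSV, which a spreadsheet could open. The HTML snippet, the `TableScraper` class, and the `scrape_table` function are hypothetical; the page is embedded as a string, and fetching it over HTTP is deliberately left out.

```python
import csv
import io
from html.parser import HTMLParser


class TableScraper(HTMLParser):
    """Collect the text of every table cell, row by row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows, each a list of cell strings
        self._row = None    # cells of the row currently being read
        self._cell = None   # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Text outside a cell (headings, whitespace between tags) is ignored.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


# Made-up page content standing in for a downloaded price list.
HTML = """
<html><body>
<h1>Today's prices</h1>
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget A</td><td><b>3.50</b></td></tr>
  <tr><td>Widget B</td><td>4.25</td></tr>
</table>
</body></html>
"""


def scrape_table(html):
    """Return one dict per data row, keyed by the header row's cell text."""
    parser = TableScraper()
    parser.feed(html)
    parser.close()
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


records = scrape_table(HTML)

# Write the structured records as CSV text.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["Product", "Price"])
writer.writeheader()
writer.writerows(records)
print(buffer.getvalue())
```

This sketch assumes a single, well-formed table whose first row is the header. Real pages are rarely this tidy, and the parser logic would need to change whenever the site's markup does.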